The role of radiologists in the days of AI – Users? Supervisors? Interventionists?

In 2011, by beating human champions at the game show Jeopardy!, IBM's Watson gave the world a glimpse of the potential of artificial intelligence (AI). Only a few years later, the rapid development of deep neural networks has further expanded the human imagination about AI. Now people wonder whether AI will ever replace physicians, perhaps starting with radiologists.

Deep learning's recent successes have mostly relied on exploiting the fundamental statistical properties of image, sound and video data. In addition, computers need sufficient data to learn well. Diagnostic radiology, which relies on radiologists to read images (X-ray, CT, MRI, etc.), seems like a perfect testing ground: in 2015 alone, roughly 800 million multi-slice exams were performed in the US, generating roughly 60 billion medical images1. Moreover, each exam comes with a report containing the radiologist's opinion and formal diagnosis. In 2015, IBM purchased 30 billion medical images for Watson to "look at"2. While IBM works toward building the most powerful and fastest radiologist ever, image recognition has already produced results in another field that relies heavily on visual inspection: dermatology. Earlier this year, computer scientists and physicians at Stanford University teamed up to train a deep learning algorithm on 130,000 images of 2,000 skin diseases. The resulting program performed just as well as 21 board-certified dermatologists in picking out deadly skin lesions3.

This naturally raises questions about the continued relevance of medical professionals, especially radiologists. But what does the diagnostic radiology workflow currently look like in the hospital, and what changes will AI bring to it?

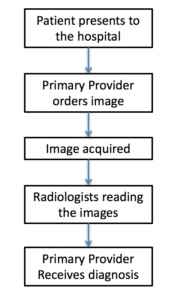

Figure 1. Current radiology workflow

Figure 1 shows the current workflow of a radiologist. First, a patient arrives at the hospital, and the primary provider (emergency room physician, hospitalist, etc.) decides that an imaging study is warranted. The images are then acquired and sent digitally to the radiology reading room, where radiologists read them and make a diagnosis. The results are sent back to the primary provider, who makes decisions accordingly. In this system, radiologists are often the bottleneck, as there are usually many more primary providers than on-call radiologists.

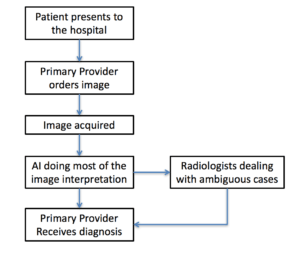

Figure 2a. First step of AI integration: Radiologist as a user of AI

Figure 2a shows the likely first step of integrating AI into the radiologist's workflow. In most hospitals, the radiology division is made up of different anatomical sections: chest, abdomen, neuroradiology, etc. Although many medical imaging AI companies are developing general-purpose solutions, more and more are specializing in particular areas (e.g. Imbio for lung imaging). In addition, some findings on images, such as lung nodules, are notoriously difficult for radiologists to track but possibly simple for AI. The logical first step of AI integration is therefore to let radiologists use AI for these difficult tasks so they can work more efficiently. This will ease congestion at the bottleneck and shorten the time it takes for providers to receive a diagnosis.

Figure 2b. Second step of AI integration: Radiologist as a supervisor of AI

Figure 2b reflects a possible second step of AI integration. As AI continues to improve, it may surpass radiologists in most aspects of image reading. However, radiologists might still be superior in complex or ambiguous cases. For example, in the hospital, radiologists often call the provider who ordered the imaging to clarify parts of the patient's history in order to narrow down the list of possible differential diagnoses. Therefore, a subset of the images read by AI may still require radiologist supervision.
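One way to picture this supervision step is as a triage rule: the AI reads every study, and only cases it is unsure about, or that need clinical context it cannot get on its own, are routed to a radiologist. The Python sketch below is purely illustrative and assumes a hypothetical model that attaches a confidence score to each read plus a flag for cases whose history is ambiguous; the names `Study` and `route_studies` and the 0.95 threshold are assumptions, not part of any real product.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Study:
    """A hypothetical imaging study after an AI read (illustrative only)."""
    study_id: str
    ai_confidence: float  # assumed score in [0, 1] from a hypothetical model
    ambiguous: bool       # e.g. history must be clarified with the ordering provider


def route_studies(
    studies: List[Study], threshold: float = 0.95
) -> Tuple[List[Study], List[Study]]:
    """Split studies into AI-finalized reports and a radiologist review queue.

    A study goes to the radiologist if it is flagged as ambiguous or if the
    AI's confidence falls below the (assumed) threshold.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in [0, 1]")
    auto_reported: List[Study] = []
    needs_review: List[Study] = []
    for study in studies:
        if study.ambiguous or study.ai_confidence < threshold:
            needs_review.append(study)
        else:
            auto_reported.append(study)
    return auto_reported, needs_review


if __name__ == "__main__":
    studies = [
        Study("A", 0.99, False),  # confident, routine: AI report goes out
        Study("B", 0.80, False),  # low confidence: radiologist reviews
        Study("C", 0.98, True),   # confident but ambiguous history: radiologist reviews
    ]
    auto, review = route_studies(studies)
    print([s.study_id for s in auto])    # ['A']
    print([s.study_id for s in review])  # ['B', 'C']
```

In this framing, the threshold is the lever that decides how much of the workload stays with radiologists: raising it sends more studies to human review, while lowering it moves the workflow closer to Figure 2c.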

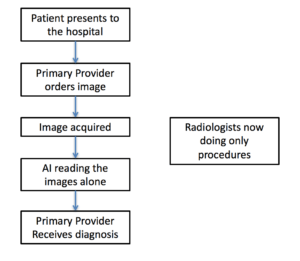

Figure 2c. Last step of AI integration: Radiologist now only doing procedures, independent of AI

Figure 2c reflects what may be the ultimate workflow, one that effectively eliminates the need for radiologists under the current workflow architecture: AI would run the department of diagnostic radiology. It is important to point out, however, that in most institutions today, diagnostic radiologists also perform image-guided procedures such as biopsies, injections and drainages. These procedures are comparatively harder for AI to replace. Radiologists may therefore become interventionists as the reading side of their work is taken over by AI.

Last, it is important to do a sanity check on AI integration. How likely is it that patients will be comfortable having their images read by a computer, even one with the same accuracy? How will primary providers interact with AI? We also have to consider how the legal questions around AI integration will be resolved. Can AI be sued if it misses a diagnosis?

Fascinating!

In my mind, liability and innovation are the two biggest problems. In all fairness, it will take some time for patients to feel comfortable being diagnosed by a machine, but once AI has been proven to be just as effective as radiologists, if not more so, at detecting abnormalities, the discomfort should go away.

The problem that AI poses to the legal system is far greater, as you suggest. Can you sue a hospital if its AI has misdiagnosed you? Who is ultimately responsible: the hospital or the firm behind the AI? Should a radiologist approve each AI diagnosis? If so, how much more efficient can we actually get? It is critical that patients retain their recourse in case of malpractice, but to what extent will that matter? Physicians today are extremely careful when making a diagnosis because the consequences of a mistake are severe. Will a computer care as much? Likely not.

Then there is the question of innovation in imaging technologies. If robots are the primary users of today's technology, how can we ensure that tomorrow's technologies are being developed? I am concerned that the limited research capital that exists will be directed at improving AI's ability to read today's images, as opposed to R&D focused on getting better images. Perhaps a bit of both would be advisable?

I think the last question you raise is critical: legal accountability. Jasmyn's comment correctly identifies some of the follow-on questions these concerns raise. My perspective on the second-order effects of this transformation begins from the premise that medicine is different from producing widgets in a factory; any 'failure rate' or 'quality control' issue in the process is unacceptable and needs to be eliminated, rather than being something that can be acceptably priced into the cost of doing business.

Once the failure rate of these diagnostic tools has been established, the likely outcome is that hospitals will use their asymmetric power over patients to inform them of this failure rate and require them to waive liability for the risks of this type of diagnosis, accepting that failure rate as the norm rather than investing ever more for diminishing returns in reducing it.