As Soon As You Publish A Map It’s Outdated: How Google Maps Uses Imagery And Machine Learning To Keep Their Maps Relevant

In a landscape where cities, roads, and businesses are constantly changing, how does Google Maps ensure that it is providing its users with the most up-to-date information?

Google Maps, a dynamic mapping service used by over one billion users, provides directions, real-time traffic data, and business information through its online and mobile platforms. Google continually updates the service with data collected from satellite and Street View imagery, as well as contributions from outside individuals, in an effort to keep the information relevant for its user base [1].

Google Maps’ Ground Truth Initiative

In 2008, Google decided to use its own in-house data to improve the quality and detail of its mapping inputs. As part of the Ground Truth Initiative, Google created a program designed to combine authoritative data from external organizations with the data it gathers itself. The GPS data and images collected by Google’s Street View fleet are added to an internal database. This has resulted in the collection of information for over five million unique miles of roads in 3,000 cities across 40 countries [2].

So how does Google comb through the 80 billion images in its database to identify new or updated mapping information? Using deep learning and artificial intelligence, the Ground Truth team can extract information from the geo-coded images and interpret evidence from street signs and building facades [3]. As shown in the graphics below, images collected by Street View vehicles are not always clear, and they vary in angle, clarity, and completeness. Google therefore relies on machine learning to compensate for missing or unclear information.

Google Street View imagery inputs [4]

To extract relevant information and ignore visual clutter, Google has developed a neural network model that predicts the text in an image using a spatial attention mechanism and learned patterns in how that text is typically written. In a study of this technology on the French Street Name Signs (FSNS) dataset, the text extraction model achieved 84.2% accuracy. The model was able to make informed assumptions about variations in spelling and the abbreviations used on these street signs [4]. This technology can also be applied to identify updated roadway markings, traffic restrictions, and business names.
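To make the idea of spatial attention more concrete, here is a minimal sketch in plain Python. It is not Google's model: it assumes a simple dot-product scoring rule, and the names `softmax`, `attend`, `feature_grid`, and `query` are hypothetical choices made for illustration. The sketch scores every cell of a small grid of image features against a query vector, turns the scores into weights that sum to one, and returns the weighted average of the features, so cells that match the query dominate and clutter is down-weighted.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(feature_grid, query):
    """Dot-product spatial attention over a grid of feature vectors.

    feature_grid: list of rows, each row a list of feature vectors.
    query: vector describing what the reader is currently looking for.
    Returns (weights, context), where weights follow row-major cell order.
    """
    cells = [vec for row in feature_grid for vec in row]
    scores = [sum(f * q for f, q in zip(vec, query)) for vec in cells]
    weights = softmax(scores)
    context = [
        sum(w * vec[d] for w, vec in zip(weights, cells))
        for d in range(len(query))
    ]
    return weights, context


# Toy 2x2 "image": the bottom-right cell matches the query most strongly.
grid = [
    [[0.0, 0.0], [1.0, 0.0]],
    [[0.0, 1.0], [3.0, 0.0]],
]
weights, context = attend(grid, query=[1.0, 0.0])
print("most attended cell index:", weights.index(max(weights)))
```

In a real text reader the query would change at each output character, so the weights would move across the sign as the text is read out. That detail is omitted here.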

What Comes Next?

As the volume of data collected from Street View continues to grow and the machine learning models improve in accuracy, Google needs to determine how to keep improving its platform for Maps users.

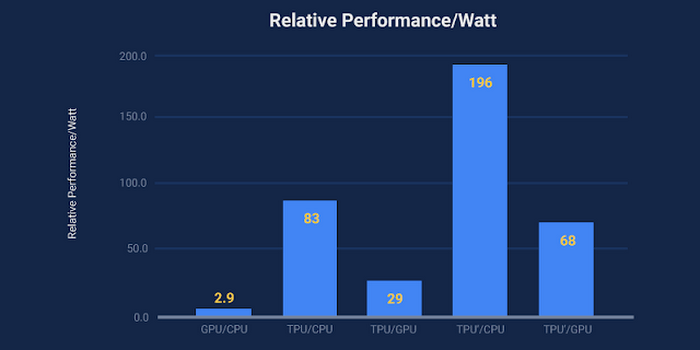

In the short term, Google intends to improve its hardware capabilities to complement the innovations in software. Tensor Processing Units (TPUs) are machine learning accelerators that were recently adopted within Google and are able to “deliver an order of magnitude better-optimized performance per watt for machine learning” [5]. The image below illustrates the relative performance per watt of TPUs compared to conventional processing units.

Comparative performance of processing units utilized by Google [6]

With continuously improving hardware, Google would be able to perform more operations per second and apply machine learning models more quickly in its Maps division as well as in other applications. In a recent announcement, Google confirmed that it is releasing a new iteration of its TPU, version 3.0 [7].
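Performance per watt is simply throughput divided by power draw, and an "order of magnitude" improvement means that ratio is roughly ten times higher. The short sketch below works through that arithmetic. The function names and both sets of chip figures are hypothetical placeholders chosen so the result comes out to 10x. They are not measurements of a TPU or of any other real processor.

```python
def perf_per_watt(ops_per_second, watts):
    """Throughput delivered per watt of power consumed."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return ops_per_second / watts


def relative_efficiency(candidate, baseline):
    """How many times more work per watt the candidate does than the baseline.

    Each argument is an (ops_per_second, watts) pair.
    """
    return perf_per_watt(*candidate) / perf_per_watt(*baseline)


# Hypothetical placeholder figures, NOT real chip measurements.
baseline_chip = (1.0e12, 100.0)  # 1 trillion ops/s at 100 W
accelerator = (8.0e12, 80.0)     # 8 trillion ops/s at 80 W

ratio = relative_efficiency(accelerator, baseline_chip)
print(f"relative performance per watt: {ratio:.1f}x")
```

Note that the hypothetical accelerator is only 8x faster in raw throughput. The remaining gain comes from its lower power draw, which is why performance per watt is reported separately from speed.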

Looking at a broader horizon for imagery and data analysis innovations, the growing presence of autonomous vehicles (AVs) and the improving technology behind them have the potential to dramatically improve the quantity and quality of data inputs for Google Maps. Lidar sensors are 3D depth sensors that have been used to build better 3D infrastructure models, informing AVs of surrounding geographical features so that a vehicle can navigate more safely [8]. As the demand for AV information continues to intensify, the capacity of the 3D modeling will also improve, thereby providing an enhanced data set to be processed for updated mapping.

As Google Maps continues to gather data internally, I would recommend that it also consider using images shared by the general public. Currently, its data sources are limited to what it is able to collect via Street View and through individual contributions; however, millions of images are made available on the Internet through social media and other platforms every day. Using this data would require verifying the validity of each source and addressing individual privacy concerns, but the information could extend Google Maps’ knowledge into areas that were previously out of reach. Alternatively, within a shorter time frame, Google could solicit voluntary information from regions where there is a gap in data in an effort to complete its mapping network.

Future Considerations

One limitation of Street View image processing is the necessity to manually verify the deep learning model outputs. As text extraction and recognition technologies continue to improve, could the Google Maps platform be updated in real time, and can Google Maps remove the human element of checking and correcting the maps?

Sources:

[1] Ibarz, Julian & Banerjee, Sujoy. (2017, May 3). Updating Google Maps with Deep Learning and Street View. Retrieved from https://ai.googleblog.com/2017/05/updating-google-maps-with-deep-learning.html

[2] Hartley, Matt. (2012, September 27). Ground Truth tells it like it is. Retrieved from https://search-proquest-com.ezp-prod1.hul.harvard.edu/businesspremium/docview/1081218472/F1A16EF4606D424DPQ/1?accountid=11311

[3] Mallick, Subhrojit. (2017, May 5). Google Maps gets a dose of machine learning. Retrieved from https://in.pcmag.com/google-maps-for-mobile/114355/google-maps-gets-a-dose-of-machine-learning

[4] Wojna, Zbigniew, Gorban, Alex, Lee, Dar-Shyang, Murphy, Kevin, Yu, Qian, Li, Yeqing, & Ibarz, Julian. (2017, August 20). Attention-based Extraction of Structured Information from Street View Imagery. Retrieved from https://arxiv.org/pdf/1704.03549.pdf

[5] Jouppi, Norm. (2016, May 18). Google supercharges machine learning tasks with TPU custom chip. Retrieved from https://cloud.google.com/blog/products/gcp/google-supercharges-machine-learning-tasks-with-custom-chip

[6] Jouppi, Norm. (2017, April 5). Quantifying the performance of the TPU, our first machine learning chip. Retrieved from https://cloud.google.com/blog/products/gcp/quantifying-the-performance-of-the-tpu-our-first-machine-learning-chip

[7] Newstex. (2018, May 9). Tech.pinions: Google creates some spin with TPU 3.0 announcement. Retrieved from https://search-proquest-com.ezp-prod1.hul.harvard.edu/businesspremium/docview/2036252097/E09E60A68A2F4D52PQ/5?accountid=11311

[8] Amadeo, Ron. (2017, September 6). Google’s Street View cars are now giant, mobile 3D scanners. Retrieved from https://arstechnica.com/gadgets/2017/09/googles-street-view-cars-are-now-giant-mobile-3d-scanners/

Thank you for sharing this piece on machine learning at Google. When reading your second question about removing the human element of checking the map, I started to wonder about circumstances where the human element is still vital. For example, local residents in a city may have a better sense of the safety on a particular street that software and hardware may not be able to capture, but that information might be critically important when Google Maps is recommending a route for a user. As a result, I think it will be important for Google to determine what are the major information gaps that its technology is not capturing and focus on closing those gaps instead of just finding ways to replace the human element.

Impressive accuracy and performance for real-time results. It seems like they are investing heavily in increasing processing speed, but Street View collection still requires a car/bicycle/backpack with a camera taking pictures. In addition to removing the human element in verifying model outputs, could the company automate their street view data collection as well? Will they have to wait for AVs to be on every street for it? Nice read!

I think your recommendation to use images made available by the public for Google Maps is a great one! This would allow for maps to function based on open innovation, which would allow for updates to be made to Google Maps when a Google Street View car is unable to pass through that area to gather updated images. I have noticed recently that Google is requesting additional information from me as I use Google Maps to improve the accuracy of their traffic estimates (e.g., asking questions such as: “How busy was the bus you rode to school this morning?”). This shows that Google is attempting to use crowd-sourced information to improve their estimates of traffic, which they could also apply to their mapping accuracy (by asking questions about specific locations to people who are frequently in that area).