People Analytics: A Service vs. Platform-Driven Model

How should companies structure their people analytics team? A thought-provoking product-centric model for building people analytics talent.

Original Post: People Analytics Platform Operating Model (Medium)

In our class discussions thus far, we’ve spent a good deal of time discussing what people analytics has the potential to be within companies, or why companies should invest in the function. However, we’ve spent little time discussing the operating model – in other words, the how:

- How should a people analytics team structure itself to meet the needs of its organization?

- How should it hire people analytics talent accordingly?

- How is the operating model of people analytics teams changing over time?

This problem of operating models is one people analytics practitioners have really begun to grapple with. Many of us who come from the function have, frankly, stumbled into it. As we’ve observed in case studies of people analytics teams, we often find that a people analytics function starts with a curious data scientist playing in a sandbox of people data, or a non-HR business person interested in being more data-driven in their decision-making around human capital. Initial ideas and hypotheses from these curious individuals are tested with data, and the function grows from there.

Such discussions of people analytics focus on what’s really the “fun” part of people analytics – e.g., the research, the analytics, the moment of satisfying one’s curiosity by plowing through a treasure trove of data in search of a “Eureka!” moment, where you bring forth a Great Insight from your Excel or R notebook to change the hearts and minds of your executive team pondering a major people decision. As satisfying as these case narratives may be, they fail to acknowledge the full cycle of operations required to make that moment of Great Insight effective. After getting our first taste of building models and evaluating insights from them, we want more. Therein, we begin to realize the real investment required to make a people analytics team run.

That investment is all the time required for need-defining, data collection, and data processing prior to any analysis being run, and afterwards all the time spent deploying that insight to ensure it’s operationalized both correctly and effectively (and in a manner that ensures the organization comes back asking for more insights). A data science function might recognize these various steps as parts of the Cross-Industry Standard Process for Data Mining, or CRISP-DM, cycle. As you can see, the “insight” moment only appears somewhere between the modeling and evaluation steps shown below.

As people analytics functions have matured and earned more positive, sophisticated reputations, so have their perspectives on how best to design and run themselves as they’ve grown from a single analyst with an industrial-organizational psychology or data science background into full-fledged teams. No longer are sole individuals expected to handle every aspect of the CRISP-DM cycle; rather, people analytics leaders are being asked to hire talent that specializes in specific aspects of the data science cycle. And with no prior models of people analytics teams to learn from, only now are they starting to question what the “best” way to structure their operating models is.

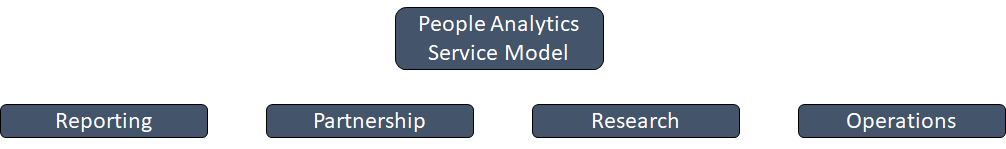

This is where Richard Rosenow’s Medium blog post enters the conversation. He argues that most people analytics teams have evolved according to a service-based paradigm, wherein the team is organized according to the basic functions outlined below:

- Reporting: A team of analysts close to an HR system (e.g., HRIS) who produce standardized data reports on a regular basis (mostly descriptive statistics) that address the vast majority of internal clients’ people data needs.

- Partnership: A team of business partners with consulting-esque skillsets who provide “white glove” service to executives and other VIPs, ensuring data insights are deployed effectively and ultimately actionable.

- Research: A team of data science-y / IO psych PhD types who work on regressions, machine learning, AI, and whatever other types of advanced analysis are needed.

- Operations: The “project management” side of the team, which works on rolling out new systems or people analytics programs (e.g., running engagement surveys).

This model fundamentally sees itself as a service-based model. An internal client has a need, and they work with the appropriate data reporting/partner/researcher/project manager to get that need met.

The problem with this type of model, Rosenow argues, is one of scalability. A service model like this one, based on treating each people analytics problem with white-glove attention, is ultimately limited by ratios: i.e., the ratio of the number of clients to the number of analysts available. This model, he argues, becomes either prohibitively expensive from a headcount perspective, or simply unwieldy from the number of analysts it requires.

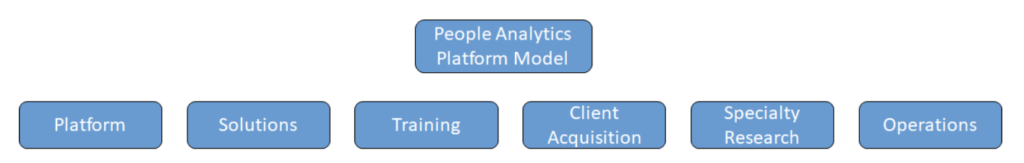

Here’s where Rosenow comes in with an alternative perspective: one that argues for a platform-based model of people analytics. He argues that people analytics teams must make the transition that data engineers, data scientists, and data product managers are all too familiar with. In short: build a data platform that stores all the data, build products that surface it to the rest of the company, and then have talent either sell or tailor that data to the rest of the organization. He believes this model is becoming more popular, judging by the increased number of job descriptions for “product managers” and “UX researchers” he’s seen for people analytics teams at companies like Apple and Amazon.

Already, one can see the similarities such a model has with how a traditional tech startup focused on data might operate. Engineers and analysts help build a platform, and product managers and UX researchers develop solutions to ensure the data on the platform is helpful to its users. Additional team members focus on training users on the platform as well as working on client acquisition to “sell” the virtues of the platform and people analytics at large (effectively, doing sales & marketing). Specialty research provides the “white glove” service for the few users who need extra last-mile tailoring, and operations ensures everything is running.

At first pass, certainly the model is brilliant, especially for those of us practitioners who are all too familiar with the frustrations that we can’t be more scalable and the frictions that come with trying to protect an over-extended people analytics team. Scalability of support is truly a holy grail of people analytics teams. After all, this is what many HR tech startups are trying to do: essentially take a company’s data and build a platform externally, with all the above functions effectively outsourced, and only the most important insights brought in.

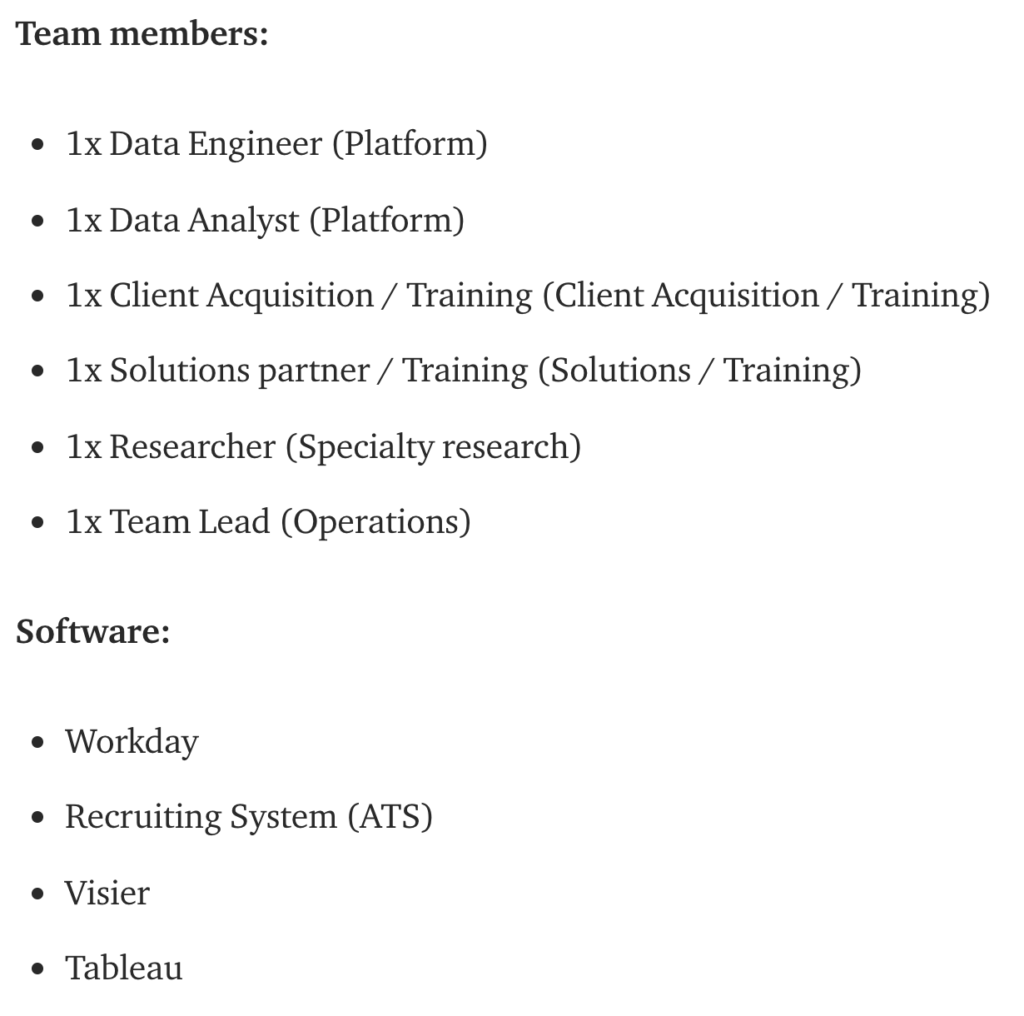

My issue with Rosenow’s thought-provoking suggestion, though, is that I believe it underestimates the (wo)man power required to make this kind of team function, which ultimately undermines the scalability it’s meant to deliver. Let’s take his base-case proposal for the talent such a team requires, pulled from his article:

We see his proposed team requires 6 members – of which really 2 are analysts/engineers working on the ins-and-outs of the data. What this means is you effectively have 2 team members managing all aspects of the CRISP-DM cycle, with support really coming in only for defining business need at the start and deploying insights at the end.

Sure, build the right platform and ideally 2 people is all you need. But how difficult is it to get to that point where a platform is fully fleshed out? How much harder is it when you consider that a platform has a multitude of stakeholders across different functions – inside and outside HR – with differing needs? To name a few:

- Recruiting wants recruiting pipeline data pulled from an ATS

- Workforce Planning & Talent Management wants data on performance management, succession planning, and network analyses

- Learning & Development wants data on managers, training effectiveness, etc.

- Workplace Culture and D&I teams want data on engagement and

- Total rewards teams want to understand impact of compensation decisions

- Facilities teams need to know impact of physical workspaces

- Executives & managers want data on attrition and engagement and recommendations on what to do

- Sales & retail leaders want people data combined with site/individual-level revenue metrics

- Operations leaders want people data combined with efficiency metrics

- HR Business Partners need support on all of the above

What’s important to note is that the data needs and questions of these teams can change all the time. With new re-organizations, shifting business priorities, and the like, the data needs of one day might be completely different from those 6 months down the road.

I bring this up not to say that the service model isn’t subject to the same varying requests from multiple stakeholders. Rather, I think it’s precisely because of these widely varying needs that a service model has become so popular – the white glove service that a well-staffed, high-touch team provides can ensure that all data needs and questions across these functions are satisfied. Each stakeholder needs reporting analysts, partners, and researchers who understand their specific questions (and the requisite datasets accordingly) to fully get into the nitty-gritty of their problems, each of which is slightly distinct from the problems of the next stakeholder function. It’s why people analytics teams so easily swell in size as the popularity of the people analytics function grows.

That’s not to say that a platform model can’t address the same needs – it’s just that I think that there’s some extent to which platform models themselves need to maintain a high analyst-to-stakeholder ratio, at least at the outset. Creating the platform requires deep understanding of each data system feeding into it – of which there are many! – including all the many data fields and tables that exist within each system – of which there are many more! What’s more, these are people data we’re talking about: ensuring full accuracy of that platform requires understanding the very human nature of each data row, and understanding that a single error can’t be chalked up to a mis-pull, but rather, is a potential direct impact on an employee’s experience. And per the point of shifting human capital structures within organizations, it’s not like these data structures stay static – they require constant care. In this scenario, I worry when you have only 2 data engineers/analysts trying to handle most of the CRISP-DM cycle, with the rest of a people analytics team either trying to build a product or sell a function prematurely.

In brief, my concern is that simply describing people analytics as a “product” or “platform” does not immediately mean it will become scalable. That’s not to say that I’m all negative on the idea. In fact, I think it’s quite thought-provoking, in the sense that it makes the case for what I’ve long said of many HR data startups: instead of thinking you can do it all externally/outsourced, bring the full startup’s capabilities in-house so that they can understand the intricacies and idiosyncrasies of your company’s unique culture and unique data. I do think companies should aspire to at least build a “data lake”-esque platform that makes reporting easier, and that partners trained in the virtues of both using data and selling the function’s organizational value are important.

If anything, perhaps my concerns with Rosenow’s assessment come from my own background in startups, where people analytics is built by a resource-and-talent-deprived team amidst an ever-changing, ambiguous environment. In those circumstances, I’d argue a service-based model is still needed as you get the function up and running. In more mature stages of companies where resources are abundant and data needs are more predictable and stable, then yes, shifting to a platform-based model may make sense. But to assume any people analytics team can get there without first considering a prerequisite service-based step may lead a team to get ahead of itself too quickly.

Hi Jason! Love this thoughtful piece on the staffing model for people analytics teams – the exact question I’ve been wondering as I navigate various entry points for the field.

From what I learned from my friend working as a project manager for Facebook’s people analytics team, they do follow the “service-based model” with researchers, data analysts, business partners, and project managers. I am excited to see the training & solution / UX roles in the platform-based model that focus on informing the broader business about the capabilities of people analytics in addressing not only the traditional employee lifecycle questions (recruiting, retention, etc.) but also the core of human capital productivity and leadership development, etc. The integration between business performance data and HRIS data definitely requires more stakeholders to understand and endorse the significance of a strong people analytics team.

Meanwhile, I do agree with you that it’s still an early stage for most companies to grow into the platform-based model as they may not have the data infrastructure or integrity to support broader business requests, and the traditional model could be a good starting point for proof of concept. Thanks again for sharing!

It is interesting to read up on how people analytics should structure and operate within an organization.

I understand a lot of private businesses see this as an opportunity to secure more competitive advantages in their industries. To push your thoughts further, may I ask, can a similar setup be adopted within the public sector?

Across the world, there is a decrease in the confidence level in public institutions. Many root causes have been hypothesized, but one that draws similarity here is the inability of government offices to attract and retain the right talent for various roles. If this is a possible root cause, then people analytics could be a possible solution.

It is possible for the public sector to have a similar setup to the one described above, possibly a centralized organization set up in the governor’s office in order to utilize its resources across multiple departments.

Super interesting, thanks for sharing!

Instead of debating a service-led or platform-led model, should people analytics be considered a separate function in an organization at all? Ideally, it’s just another part of the infrastructure of a company – similar to how customer or financial data and analytics are used.

You’ll need engineers to build the core infrastructure platform, just as you would for the customer and financial data. You can have data scientists work with the engineers to build tooling and find significant predictors and benchmarks, but it may be better to use third party companies who are using larger datasets and/or academic research. I understand that this wouldn’t solve every bespoke people analytics request, but I imagine 80% of issues for managers and employees could be solved in this way – hiring practices, employee engagement, network analysis, sentiment, etc. The HR team could still use this data platform and create bespoke analysis to help make broad structural changes in people management, but teams across the board could use this tooling to make better decisions on a day-to-day basis.

I think this would solve for the resourcing and multiple stakeholder issues you brought up. It would also push people decision-making down to managers and employees, which helps get buy-in in a decentralized way.

Let me know if you have any thoughts!

Hi Jason – super interesting. I love the super detailed walkthrough of how to do this in practice. I think that one additional benefit – or just a reframe of the argument – of the introduction of platform thinking into people analytics is the benefit to not only scale but scope.

To elaborate, people analytics is viewed as a driver of HR’s transformation into a more strategic function. Presumably more and more operating decisions are being invoked by potential insights that can be derived from a people analytics system, well beyond the current scope of HR’s decision-making. Taking a platform approach as described in the blog and by you enables a shared set of technology and data assets to be re-oriented to new problems in the organization and by stakeholders that might not currently be thought of as key “people operations” decisionmakers.

This new thinking about scope — enabled by a platform approach — is difficult in practice but could radically increase the value of people analytics within the enterprise!