Improving Representation in Media through Machine Learning

Disney and Universal partner with the Geena Davis Institute on Gender in Media to use AI to improve representation in the development process.

CONTEXT

While our nation has grown increasingly diverse, several aspects of society have not kept pace, including the entertainment industry. Academy Award winner Geena Davis set out to correct this imbalance by founding the Geena Davis Institute on Gender in Media. The Institute's aim is to work "collaboratively within the entertainment industry to engage, educate and influence the creation of gender balanced onscreen portrayals…" (https://seejane.org/about-us/). The Geena Davis Institute tackles this issue with a "data-driven" approach, including the recent GD-IQ: Spellcheck for Bias, a machine learning tool developed to flag and remedy under- and misrepresentation in media.

ISSUE

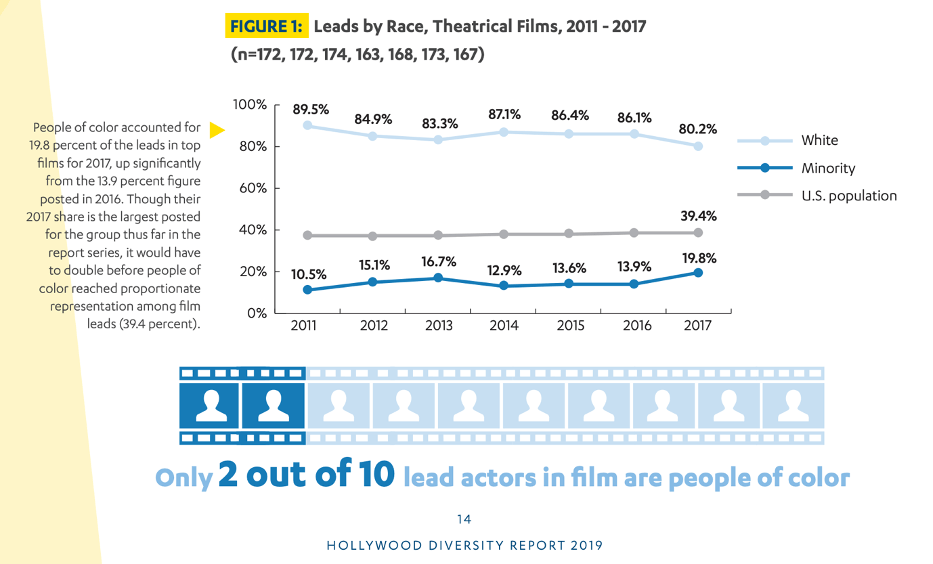

The entertainment industry fails to mirror our world both in front of and behind the camera. For example, the UCLA Hollywood Diversity Report shows that white actors are overrepresented in lead roles in theatrical releases by a factor of two.

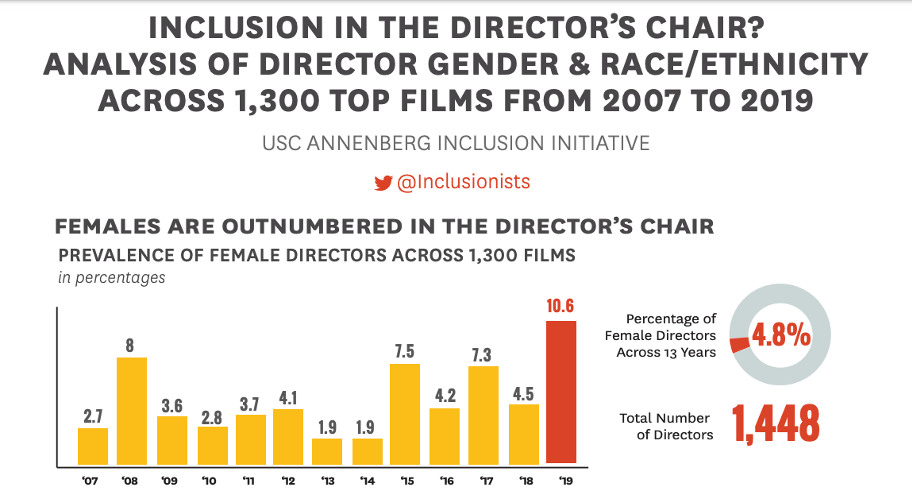

Behind the camera, it is a similar story. The USC Annenberg Inclusion Initiative found that while the Metacritic scores of films by male and female directors show no statistically significant difference, male directors outnumber female directors by 20 to 1 (http://assets.uscannenberg.org/docs/aii-inclusion-directors-chair-20200102.pdf).


AI SOLUTION

The Geena Davis Institute takes a multi-pronged approach to remedying this deficiency. One prong is GD-IQ: Spellcheck for Bias, developed in partnership with USC's Viterbi School of Engineering to help companies identify their own under- and misrepresentation of different cohorts. The tool identifies and quantifies representation in video content, as well as the quality of characters' portrayals across identities. As USC Annenberg Media reported, Shri Narayanan, "USC engineering professor and director of Signal Analysis and Interpretation Laboratory (SAIL), said that the tool will use artificial intelligence technology to understand various aspects of the story that could reveal patterns of biases" (http://www.uscannenbergmedia.com/2020/02/25/ai-technology-from-the-usc-viterbi-school-work-to-increase-representation-in-film-and-media/). The tool "rapidly tall[ies] the genders and ethnicities of characters… the number of speaking lines the various groups have, the level of sophistication of the vocabulary they use and the relative social status or positions of power assigned to the characters by group" (https://www.hollywoodreporter.com/news/geena-davis-unveils-partnership-disney-spellcheck-scripts-gender-bias-1245347). More specifically, the "patented machine learning tool" identifies cohorts including "characters who are people of color, LGBTQI, possess disabilities or belong to other groups typically underrepresented and failed by Hollywood storytelling" (https://www.hollywoodreporter.com/news/geena-davis-unveils-partnership-disney-spellcheck-scripts-gender-bias-1245347).
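The tool's internals have not been published, so the following is only a minimal sketch of the simplest metric quoted above, each group's share of speaking lines. It assumes a script has already been parsed into (character, line) pairs and that each character carries a hand-assigned cohort label. The function name `speaking_line_shares`, the sample dialogue, and the labels `group_a` and `group_b` are all hypothetical; the vocabulary and social-status measures the tool reportedly uses are not represented here.

```python
from collections import Counter


def speaking_line_shares(dialogue, cohort_of):
    """Return each cohort's share of speaking lines (0.0 to 1.0).

    dialogue:  list of (character, line) pairs (hypothetical input format)
    cohort_of: dict mapping character name to a hypothetical cohort label
    """
    # Count one speaking line per dialogue entry, grouped by the speaker's cohort.
    counts = Counter(cohort_of[character] for character, _ in dialogue)
    total = sum(counts.values())
    if total == 0:
        # An empty script has no lines to apportion.
        return {}
    return {cohort: n / total for cohort, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical four-line scene used only for illustration.
    dialogue = [
        ("ALEX", "We leave at dawn."),
        ("ALEX", "Bring the map."),
        ("ALEX", "Stay close."),
        ("MARIA", "I know a shortcut."),
    ]
    cohort_of = {"ALEX": "group_a", "MARIA": "group_b"}
    shares = speaking_line_shares(dialogue, cohort_of)
    for cohort, share in sorted(shares.items()):
        print(f"{cohort}: {share:.0%} of speaking lines")
```

Run on the sample scene, this reports that `group_a` holds 75% of the speaking lines and `group_b` 25%. A plain count like this is the easy part; the harder and more contestable work lies in assigning cohort labels and judging the quality of a portrayal.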

In the tool's first pilot, the Geena Davis Institute on Gender in Media partnered with Walt Disney Studios in late 2019 to assess projects in early-stage (manuscript) development. As Davis put it, "I'm very proud to announce we have a brand new partnership with Walt Disney Studios using Spell Check for Bias. They are our pilot partners and we're going to collaborate with Disney over the next year using this tool to help their decision-making, identify opportunities to increase diversity and inclusion to the manuscripts that they receive. We're very excited about the possibilities with this new technology and we encourage everybody to get in touch with us and give it a try" (https://screenrant.com/disney-movie-scripts-gender-bias-geena-davis/). By focusing on early-stage development, Walt Disney Studios can flag issues in its content before spending more development money. And by flagging issues early in the creative process, course corrections can be fully integrated into character and story development instead of being shoehorned in later, where they may also seem inauthentic.

A few months later, the Geena Davis Institute on Gender in Media rolled out a second pilot, this time with Universal Entertainment Group, to assess its Latinx representation. Universal Pictures president Peter Cramer praised the partnership as part of the organization's "strides throughout the production process towards more inclusive storytelling for all underrepresented groups, identifying additional resources to help improve and increase Latinx portrayals onscreen" (https://variety.com/2020/film/news/universal-geena-davis-usc-latinx-representation-1203507849/). This pilot again focuses on early-stage development, aiming to promote "more authentic Latinx onscreen representation and casting opportunities" (https://deadline.com/2020/02/universal-geena-davis-institute-and-usc-spellcheck-for-bias-resource-latinx-representation-inclusion-diversity-1202862892/).

While USC and the Geena Davis Institute on Gender in Media have not released specific information on how the tool works, one can expect that, as a machine learning tool, it will improve over time. Since machine learning tools can "improve[sic]…over time based on additional data or new experiences," (HBS Case 9-619-014: Zebra Medical Vision) the more scripts it 'reads,' the better the tool will become at identifying and quantifying representation. Likewise, the more studios and creative partners that engage with GD-IQ: Spellcheck for Bias, the better the tool will be.

RISKS AND CRITICISMS

As with any coded tool, this machine learning tool is only as good as the artists and programmers who create it. A tool built specifically to surface conscious and unconscious biases in representation may itself be prone to the unconscious biases of its creators. Partly because of this potential pitfall, USC's student newspaper, the Daily Trojan, published an op-ed titled "Viterbi must end its partnership with diversity spell check" (http://dailytrojan.com/2019/11/03/viterbi-must-end-its-partnership-with-diversity-spell-check/). There are, however, mechanisms to reduce the risk of building biases into the tool. Namely, stripping normative or qualitative measures from the assessment and keeping to countable facts can make the AI more purely fact-based. One might also argue that even if the tool retains some bias, it is making strides toward improving representation and carries far less bias than a development process with no such analysis at all.

The other pitfall this tool might face is a backlash against trying to quantify a purely creative product. Creators may reject tools like this because they want full creative control and do not want to be beholden to what the numbers say. A parallel would be Netflix creating a new show based purely on an amalgamation of the best-performing aspects of its existing content: a Frankenstein of a storyline fabricated from what the data (not the writer) suggests. The conservative Washington Examiner was quick to pick up on this critique, calling GD-IQ: Spellcheck for Bias a "censor-bot" and arguing that it was an attempt to strip creators of their license (https://www.washingtonexaminer.com/opinion/word-of-the-week-representation). However, as long as the tool is used to inform writers rather than dictate to them, helping them tell better stories that reflect the world we live in, it is a valuable resource.

CONCLUSION

To argue that GD-IQ robs the writer of her creativity, or that it infuses even more bias into the creative development process, is to misunderstand the tool itself. As long as underrepresentation exists in media, tools like this can be instrumental in surfacing the inequalities inherent in storylines and character descriptions. This is a groundbreaking and perhaps precedent-setting application of AI in creative industries, where it can be difficult to quantify something experiential or emotional.

Super pertinent problem and solution that could be applicable to a wide variety of industries! Even tools as simple as Outlook/Gmail could have these "inclusion" checks, which could change the representation bias that exists in society today. HR policies and job postings are another key area that could use tools such as this one. My one concern (having developed this as an Outlook plugin during a hackathon) is whether universally acceptable definitions of bias exist, given that these may differ drastically by culture. Especially in creative writing, a one-size-fits-all approach might not be the best one. I wonder whether AI can solve this issue. The other issue with these types of recommendation systems built on deep learning / NLP is a lack of explainability. Why is this an issue? What do you think could be potential solutions here?

Thanks for the great article. It's such a great initiative, and I agree that minority representation in the media/entertainment industry is a problem. Biases are often unconscious and may never surface to the conscious mind if nobody tells us that what we write, say, or act may be wrong. This could create a vicious cycle, especially in this industry: the fewer minorities appear on TV or in movies, the fewer people from minority groups want to go into the entertainment space in the first place. It's great to see that leading players like Universal and Disney are engaging with the institute. I hope that they continue to seek out and develop talented individuals regardless of their skin color.

Great article, thank you! While there is a lot of emphasis on the bias inherent in machine learning, I think there is much less focus on applications such as the one above, where ML has the potential to reveal biases and lack of representation. In my mind, it is of course fair to question whether the algorithm itself is biased, but I still believe that having an additional checkpoint, regardless of whether that checkpoint has some bias of its own, will help address and eradicate underrepresentation in the media.

Super interesting idea! Thanks for sharing. I wonder whether and how this technology could be applied to create guidelines and learning materials for writers and executives, in addition to flagging existing content. Is there any way it could help create content?

Love this unique way of applying technology! It's great to hear that the algorithm takes into account factors within the script (status, number of lines, types of lines) instead of looking at this purely from a numbers basis. This gives more comfort that they are thinking about misrepresentation broadly and about how it will affect the generations that see the movies and content that Disney and Universal create.

I know this industry tends to be highly competitive (e.g., the streaming wars, battles over shows/actors, etc.), so I hope an initiative like this can unite the industry to address this issue.