Google AlphaGo: How a recreational program will change the world

The AlphaGo program shocked the world by defeating Lee Sedol, the world champion in Go. This post explains how Google used data and algorithms to achieve this, and how a seemingly recreational program will create and capture value for Google.

In March 2016, Google’s AlphaGo program defeated the world champion of the board game “Go” [1]. Go is about 2,500 years old and is popular in China and Korea [Go game rules are here]. This was a watershed moment in artificial intelligence, as experts had not expected a computer to defeat professional Go players for many more decades.

What is so special about Go compared to Chess?

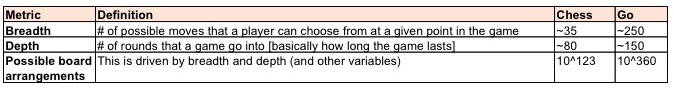

IBM’s Deep Blue computer defeated chess grandmaster Garry Kasparov in a 1997 match [2]. However, Go is more complicated than chess. Two metrics capture a game’s complexity:

Source: Blogwriter analysis and [2]

To give some perspective, the number of atoms in the universe is about 10^80 [2]. Therefore, neither chess nor Go can realistically be solved by brute force. Go is also 200 times more difficult to solve than chess.
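The brute-force point can be checked with a rough back-of-the-envelope calculation. The sketch below assumes the commonly quoted ballpark figures of about 35 legal moves per turn over about 80 moves for chess, and about 250 legal moves per turn over about 150 moves for Go. These numbers are assumptions for illustration and are not taken from this post; the function name is also just illustrative.

```python
import math

# Rough, widely quoted estimates (assumptions, not figures from this post):
# average legal moves per turn (breadth) and typical game length in moves (depth).
GAMES = {
    "chess": {"breadth": 35, "depth": 80},
    "go": {"breadth": 250, "depth": 150},
}
ATOMS_IN_UNIVERSE_LOG10 = 80  # 10^80, as quoted in the text


def log10_game_tree_size(breadth: int, depth: int) -> float:
    """Return log10(breadth ** depth), the order of magnitude of the game tree."""
    return depth * math.log10(breadth)


for name, game in GAMES.items():
    exponent = log10_game_tree_size(game["breadth"], game["depth"])
    surplus = exponent - ATOMS_IN_UNIVERSE_LOG10
    print(f"{name}: roughly 10^{exponent:.0f} move sequences, "
          f"about 10^{surplus:.0f} times the number of atoms in the universe")
```

Working in logarithms avoids building astronomically large integers and makes the comparison with 10^80 a simple subtraction of exponents.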

Data and algorithmic approach used by AlphaGo:

AlphaGo achieved this using a combination of data and algorithms.

Large datasets:

The initial dataset for AlphaGo consisted of 30Mn board positions from 160,000 real-life games (Dataset A). This was divided into 2 parts: a training dataset and a testing dataset. The training dataset was labelled (i.e. every board position corresponded to an eventual win or loss). AlphaGo then developed models to predict the moves of a professional player. These models were evaluated on the testing dataset, where they correctly predicted the human move 57% of the time (far from perfect, but prior algorithms had achieved a success rate of only 44%) [3].
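The train/test procedure described above can be sketched in a few lines. This is only an illustration: the "model" here is a simple lookup table that remembers the most common move for each position, a stand-in for AlphaGo's far more sophisticated models, and the toy data, the (position, move) pair format, and all function names are hypothetical.

```python
import random
from collections import Counter, defaultdict


def split_dataset(examples, test_fraction=0.2, seed=0):
    """Shuffle (position, move) pairs and split them into training and testing sets."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) - int(len(shuffled) * test_fraction)
    return shuffled[:cut], shuffled[cut:]


def fit_most_common_move(train):
    """Toy stand-in for a learned model: remember the most frequent move per position."""
    counts = defaultdict(Counter)
    for position, move in train:
        counts[position][move] += 1
    return {position: c.most_common(1)[0][0] for position, c in counts.items()}


def accuracy(model, test):
    """Fraction of held-out positions where the predicted move matches the human move."""
    hits = sum(1 for position, move in test if model.get(position) == move)
    return hits / len(test)


# Hypothetical toy data: position "p1" is usually answered with move "a", and so on.
examples = [("p1", "a")] * 70 + [("p1", "b")] * 30 + [("p2", "c")] * 100
train, test = split_dataset(examples)
model = fit_most_common_move(train)
print(f"held-out accuracy: {accuracy(model, test):.0%}")
```

The key point is that accuracy is measured only on positions the model did not see during training, which is what makes a figure like 57% meaningful.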

AlphaGo also keeps playing against itself, generating even more data (Dataset B). It thus continues to learn from new data and to improve its performance.

Algorithms:

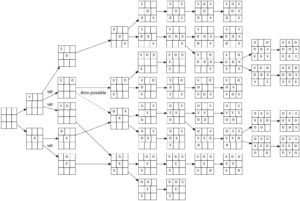

A game like Go is essentially deterministic: since every move must follow fixed rules, a sufficiently powerful computer could build a game tree (like the one shown above for tic-tac-toe) covering all possible moves and then work backwards to identify the move with the highest probability of success. Unfortunately, Go’s game tree is so large and branches out so much that it is impossible for a computer to do this calculation. [4]
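For a game as small as tic-tac-toe, the "build the tree and work backwards" idea can actually be carried out. The sketch below is a standard minimax search, not anything specific to AlphaGo; the board encoding (a 9-character string) and the function names are choices made for this illustration.

```python
from functools import lru_cache

# The eight ways to get three in a row on a 3x3 board indexed 0..8.
WIN_LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
             (0, 3, 6), (1, 4, 7), (2, 5, 8),
             (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in WIN_LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def minimax(board, player):
    """Value of the position for X (+1 win, 0 draw, -1 loss) with `player` to move."""
    won = winner(board)
    if won is not None:
        return 1 if won == "X" else -1
    if " " not in board:
        return 0  # board full, nobody won
    other = "O" if player == "X" else "X"
    values = [minimax(board[:i] + player + board[i + 1:], other)
              for i, cell in enumerate(board) if cell == " "]
    # X picks the child with the highest value, O the lowest.
    return max(values) if player == "X" else min(values)


def best_move(board, player):
    """Return the index of a move that is optimal for `player`."""
    other = "O" if player == "X" else "X"
    moves = [i for i, cell in enumerate(board) if cell == " "]

    def score(i):
        return minimax(board[:i] + player + board[i + 1:], other)

    return max(moves, key=score) if player == "X" else min(moves, key=score)


print(minimax(" " * 9, "X"))  # 0: perfect play from the empty board is a draw
```

This exhaustive approach finishes instantly for tic-tac-toe, but the same recursion on a Go board would never terminate in practice, which is why AlphaGo needs the shortcuts described next.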

AlphaGo identifies the best move by using 2 algorithms together. The first algorithm (Algorithm X) reduces the breadth of the search, while the second algorithm (Algorithm Y) reduces its depth. Algorithm X comes up with possible moves for AlphaGo to play, while Algorithm Y attaches a value to each of these moves. This eliminates a number of moves that would be impractical (i.e. for which the probability of winning would be almost zero) and thus focuses the machine’s computational power on moves with a higher winning probability.

Source: https://commons.wikimedia.org/wiki/File:Tic-tac-toe-full-game-tree-x-rational.jpg
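The division of labour between Algorithm X and Algorithm Y can be illustrated on a much simpler game. The sketch below uses a toy stone-taking game (remove 1 to 3 stones per turn; whoever takes the last stone wins) and a plain depth-limited search rather than AlphaGo's actual search procedure. `propose_moves` and `estimate_value` are hypothetical hand-written stand-ins for the two algorithms; in AlphaGo these roles are played by learned models whose outputs are approximate, whereas the rules of thumb here happen to be exact for this toy game.

```python
def legal_moves(stones):
    """A player may take 1, 2 or 3 stones, but not more than remain."""
    return [m for m in (1, 2, 3) if m <= stones]


def propose_moves(stones, k):
    """Stand-in for Algorithm X: keep only the k most promising moves (less breadth).

    Hand-written prior: prefer moves that leave a multiple of 4 stones.
    """
    ranked = sorted(legal_moves(stones), key=lambda m: (stones - m) % 4 != 0)
    return ranked[:k]


def estimate_value(stones):
    """Stand-in for Algorithm Y: judge a position without searching further (less depth).

    Returns +1 if the player to move is expected to win, -1 otherwise.
    """
    return -1.0 if stones % 4 == 0 else 1.0


def search(stones, depth, k):
    """Value of the position for the player to move, from -1 (loss) to +1 (win)."""
    if stones == 0:
        return -1.0  # the opponent just took the last stone
    if depth == 0:
        return estimate_value(stones)  # stop early and estimate instead
    # Only the proposed moves are explored; a good result for the opponent is bad for us.
    return max(-search(stones - m, depth - 1, k) for m in propose_moves(stones, k))


def choose_move(stones, depth=2, k=2):
    """Pick the proposed move whose resulting position is worst for the opponent."""
    return max(propose_moves(stones, k),
               key=lambda m: -search(stones - m, depth - 1, k))


print(choose_move(10))  # 2: taking two stones leaves the opponent with 8
```

With `k=2` and `depth=2` the search looks at only a handful of positions, yet it picks the same move as an exhaustive search (`choose_move(10, depth=10, k=3)`), which is the effect the two algorithms are meant to achieve at a vastly larger scale.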

The following schematic explains how AlphaGo uses this combination of data and algorithms to win [Note: this schematic is very bare bones and gives a very high level overview of how AlphaGo works]

Source: Blogwriter

What value does a “recreational” algorithm create?

AlphaGo’s value goes beyond its ability to win at a board game. Google acquired DeepMind (the creator of AlphaGo) in 2014 for $500Mn [5] and thus clearly sees value in a seemingly recreational program. IBM’s Watson is a good example of a recreational program becoming a mainstream technology: Watson started out as a computer built to play Jeopardy!, but the Watson business now employs more than 10,000 people [6] and is used in healthcare, digital assistants and other areas.

The advantage of AlphaGo is that its algorithms are general purpose and not specific to Go [2]. It would be comparatively easy for Google to adapt them to other AI challenges. The data that AlphaGo has collected or generated is not useful for other applications, but the algorithms that power the machine are. Google is already using elements of AlphaGo for incremental improvements in products such as Search, image recognition (automatic tagging of images inside Google Photos) and Google Assistant [7].

How will Google capture value from this?

- Indirect value capture: AlphaGo’s algorithms are already improving Google’s products (like Search, Photos and Assistant). Better Google products –> More engaged users –> More ad revenue for Google.

- Direct value capture: In the future, Google can sell learning and computation services (built on top of AlphaGo’s algorithms) to other firms. IBM Watson has already on-boarded more than 100 businesses onto its platform [8], and IBM’s Cognitive Solutions division (which includes Watson) had revenue of $5Bn in Q4 2016 [9]. Google could therefore license this technology to products like Siri in the future.

Looking ahead

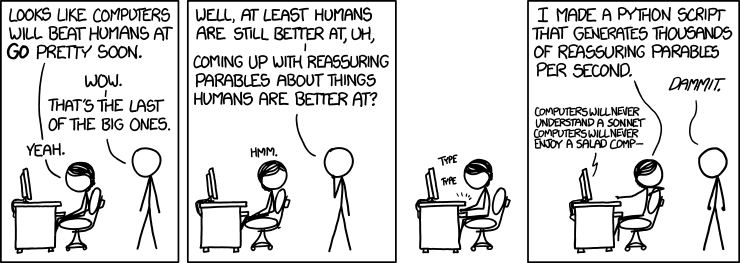

Google achieved the holy grail in artificial intelligence by developing AlphaGo. Its investment in these algorithms (and in a seemingly worthless attempt to win at a board game) will pay rich dividends by improving products like Search, Photos, Assistant and self-driving cars, as well as by helping to solve other big problems in healthcare, manufacturing and beyond.

Source: https://xkcd.com/1263/

*******************************************************************************************************************************

Sources:

[1] https://www.theatlantic.com/technology/archive/2016/03/the-invisible-opponent/475611/

[2] https://www.scientificamerican.com/article/how-the-computer-beat-the-go-master/

[3] https://blog.google/topics/machine-learning/alphago-machine-learning-game-go/

[4] https://www.tastehit.com/blog/google-deepmind-alphago-how-it-works/

[5] https://techcrunch.com/2014/01/26/google-deepmind/

[6] https://www.nytimes.com/2016/10/17/technology/ibm-is-counting-on-its-bet-on-watson-and-paying-big-money-for-it.html

[7] http://www.theverge.com/2016/3/14/11219258/google-deepmind-alphago-go-challenge-ai-future

[8] https://bits.blogs.nytimes.com/2014/10/07/ibms-watson-starts-a-parade/

[9] https://www.theregister.co.uk/2017/01/20/its_elementary_ibm_when_is_watson_going_to_make_some_money/

Extremely lucid explanation of a very complex topic Bipul, thanks!

1. One thing I am curious about is how startups today can even think of disrupting Microsoft, Google, Amazon etc., essentially the big players in artificial intelligence. Since most AI algorithms require a large amount of data and compute power to improve themselves, would the big players not always be the winners in the end?

2. Secondly, during my summer I spoke with a very senior engineer, who told me that most of the code we write to develop applications today will become a commodity in the next 10-15 years. Computers will be smart enough to write their own code using AI, and only very specialized code will be written by human beings. In a world where AI is so dominant and pervasive, how do you see players other than a select few capturing value?

Hi Sidharth. Thanks for your compliments. My answers are below:

Answer 1:

I think there are still ways for startups to disrupt big companies. Take AlphaGo for example. While data was needed for training and testing the AI models, once the training/testing was completed, the algorithms were more important. The training data that AlphaGo used was historical data about Go board positions, which is not that difficult to obtain. The advantage that AlphaGo and some of the big companies have is a first-mover advantage in creating even more data, which is proprietary and not publicly available. For example, AlphaGo played against itself to generate even more board position data (which is proprietary). Thus, even startups can compete if they invest in developing these technologies early.

Regarding compute power: better-designed algorithms can actually work with less compute power. For example, AlphaGo makes fewer computations per second than Deep Blue (which was used to defeat Kasparov at chess some 20 years back). Another, distributed version of AlphaGo was also released, and it ran on many more computers: 1202 CPUs, compared with 48 CPUs for the original AlphaGo. However, the distributed version had a rating of 3140 while the original version had a rating of 2890, so the performance improvement was not that large (indicating that the algorithms are not constrained by the computer’s computing power).

Answer 2:

I think that in such a scenario, in which machines are writing a lot of code, the field will become even more open to other players. The process of writing code would be democratized and available to startups and smaller firms. Hence, I feel that smaller players might be able to capture a bigger share of the pie.